Datastage Frequently asked questions, Datastage Interview questions. datastage company interview questions, questions and answers, Real time scenarios, solved datastage jobs with examples,datawarehouse, datamart, lookups, join stage, Transformer, scd, type-scd, datastage tutorials, datastage tips, datastage online help

Thursday, 23 May 2013

Wednesday, 22 May 2013

Free DataStage Lab Exercises

Hey Guys Download Free DataStage Lab Exercises

DataStage+Lab+Exercises+Part-1

DataStage+Lab+Exercises+Part-2

DataStage+Lab+Exercises+Part-1

DataStage+Lab+Exercises+Part-2

Tuesday, 21 May 2013

Transformer Stage using Stripwhitespaces Function

Stripwhitespaces is the function used for the remove before,after and middle of the characters.

Some times we get the data as below

e_id,e_name

10,em y

20, j ul y

30,re v o l

40,w a go n

Take Job Design as

Se.File ------ Tx------D.s

Read and load the data in Sequential file stage

Go to Transformer stage

Here, we use stripwhitespaces function in the required column derivation.

You can write expression as below

Stripwhitespaces(e_name) for e_name

Click ok

Compile and Run the data

You will get the data after removal of all the spaces between the characters,

before and after spaces also.

Some times we get the data as below

e_id,e_name

10,em y

20, j ul y

30,re v o l

40,w a go n

Take Job Design as

Se.File ------ Tx------D.s

Read and load the data in Sequential file stage

Go to Transformer stage

Here, we use stripwhitespaces function in the required column derivation.

You can write expression as below

Stripwhitespaces(e_name) for e_name

Click ok

Compile and Run the data

You will get the data after removal of all the spaces between the characters,

before and after spaces also.

Transformer-Stage-Example

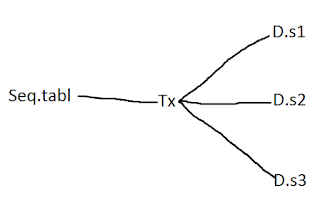

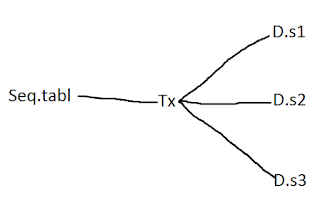

If our requirement is to filter the data department wise from the file below

samp_tabl

1,sam,clerck,10

2,tom,developer,20

3,jim,clerck,10

4,don,tester,30

5,zeera,developer,20

6,varun,clerck,10

7,luti,production,40

8,raja,priduction,40

And our requirement is to get the target data as below

In Target1 we need 10th & 40th dept employees.

In Target2 we need 30th dept employees.

In Target1 we need 20th & 40th dept employees.

Take Job Design as below

Read and Load the data in Source file

In Transformer Stage just Drag and Drop the data to the target tables.

Write expression in constraints as below

dept_no=10 or dept_no= 40 for table 1

dept_no=30 for table 1

dept_no=20 or dept_no= 40 for table 1

Click ok

Give file name at the target file and

Compile and Run the Job to get the Output

Read more about Transformer Stage with example

samp_tabl

1,sam,clerck,10

2,tom,developer,20

3,jim,clerck,10

4,don,tester,30

5,zeera,developer,20

6,varun,clerck,10

7,luti,production,40

8,raja,priduction,40

And our requirement is to get the target data as below

In Target1 we need 10th & 40th dept employees.

In Target2 we need 30th dept employees.

In Target1 we need 20th & 40th dept employees.

Take Job Design as below

Read and Load the data in Source file

In Transformer Stage just Drag and Drop the data to the target tables.

Write expression in constraints as below

dept_no=10 or dept_no= 40 for table 1

dept_no=30 for table 1

dept_no=20 or dept_no= 40 for table 1

Click ok

Give file name at the target file and

Compile and Run the Job to get the Output

Read more about Transformer Stage with example

What is Lookup stage? Uses of Look up stage?

It performs on dataset read into memory from any other parallel job stage that can

output data.

The main uses of the lookup stage is to map short codes in the input dataset onto

expanded information from a look up table which is then joined to the data coming from

input. For example, some we get the data with customers name and address. Here the

data identifies state as a two letters or three letters like mel for melbourne or

syd for sydney. But you want the data to carry the full name of the state by

defining the code as the key column. In this case lookup stage used very much.

It will reads each line, it uses the key to look up the stage in the lookup table.

It adds the state to the new column defined for the output link.

So that full state name is added to the each row based on codes given.

If the code not found in the lookup table, record will be rejected.

Lookup stage also performs to validate the row.

Look Up stage is a processing stage which performs horizontal combining.

Lookup stage Supports

N-Inputs ( For Norman Lookup )

2 Inputs ( For Sparse Lookup)

1 output

And 1 Reject link

Up to Datastage 7 Version We have only 2 Types of LookUps

a) Normal Lookup and b) Sparse Lookup

But in Datastage 8 Version, enhancements has been take place. They are

c) Range Look Up And d) Case less Look up

Normal Lookup:-- In Normal Look, all the reference records are copied to the memory and the primary records are cross verified with the reference records.

Sparse Lookup:--In Sparse lookup stage, each primary records are sent to the Source and cross verified with the reference records.

Here , we use sparse lookup when the data coming have memory sufficiency

and the primary records is relatively smaller than reference date we go for this sparse lookup.

Range LookUp:--- Range Lookup is going to perform the range checking on selected columns.

For Example: -- If we want to check the range of salary, in order to find the grades of the employee than we can use the range lookup.

output data.

The main uses of the lookup stage is to map short codes in the input dataset onto

expanded information from a look up table which is then joined to the data coming from

input. For example, some we get the data with customers name and address. Here the

data identifies state as a two letters or three letters like mel for melbourne or

syd for sydney. But you want the data to carry the full name of the state by

defining the code as the key column. In this case lookup stage used very much.

It will reads each line, it uses the key to look up the stage in the lookup table.

It adds the state to the new column defined for the output link.

So that full state name is added to the each row based on codes given.

If the code not found in the lookup table, record will be rejected.

Lookup stage also performs to validate the row.

Look Up stage is a processing stage which performs horizontal combining.

Lookup stage Supports

N-Inputs ( For Norman Lookup )

2 Inputs ( For Sparse Lookup)

1 output

And 1 Reject link

Up to Datastage 7 Version We have only 2 Types of LookUps

a) Normal Lookup and b) Sparse Lookup

But in Datastage 8 Version, enhancements has been take place. They are

c) Range Look Up And d) Case less Look up

Normal Lookup:-- In Normal Look, all the reference records are copied to the memory and the primary records are cross verified with the reference records.

Sparse Lookup:--In Sparse lookup stage, each primary records are sent to the Source and cross verified with the reference records.

Here , we use sparse lookup when the data coming have memory sufficiency

and the primary records is relatively smaller than reference date we go for this sparse lookup.

Range LookUp:--- Range Lookup is going to perform the range checking on selected columns.

For Example: -- If we want to check the range of salary, in order to find the grades of the employee than we can use the range lookup.

How to do Sorting without Sorting stage

You can do it as normal process first as follows.

If we want to read the data using Sequential File

Design as follows : -------------

Seq. File ------------------------Dataset File

To read the data in Sequential file

Open Properties of Sequential file

and give the file name.

Now you can give the file path, by clicking on the browse for the file.

And in Options select True ( If the first line is column name )

You can just leave the rest of the options as it is.

Now go to Column and click on load, then select the file you like to read from the

table definitions .

( This file should be same which you have given in the properties. )

Now in the Target Dataset - Give file name.

Now for the sorting process.

In the Target Open Dataset properties

And go to Partitioning ---- Select Partitioning type as Hash

In Available Columns Select Key Column ( E_Id for EXAMPLE) to be sorted.

Click Perform Sort

Click Ok

Compile And Run

The data will be Sorted in the Target.

If we want to read the data using Sequential File

Design as follows : -------------

Seq. File ------------------------Dataset File

To read the data in Sequential file

Open Properties of Sequential file

and give the file name.

Now you can give the file path, by clicking on the browse for the file.

And in Options select True ( If the first line is column name )

You can just leave the rest of the options as it is.

Now go to Column and click on load, then select the file you like to read from the

table definitions .

( This file should be same which you have given in the properties. )

Now in the Target Dataset - Give file name.

Now for the sorting process.

In the Target Open Dataset properties

And go to Partitioning ---- Select Partitioning type as Hash

In Available Columns Select Key Column ( E_Id for EXAMPLE) to be sorted.

Click Perform Sort

Click Ok

Compile And Run

The data will be Sorted in the Target.

Create a DataStage Parallel Job

Now that you understand how data is processed in DataStage, let us create a sample DataStage job

Step 1:

Creating a new job and save it

Step 2:

Understanding the requirement of the job

Requirement: Let’s say that the customer wants a DataStage jobs that reads employee data from the file Emp.txt (which is comma separated) and this data has to be written to another file Emp_Grade.txt.

Requirement: Let’s say that the customer wants a DataStage jobs that reads employee data from the file Emp.txt (which is comma separated) and this data has to be written to another file Emp_Grade.txt.

Input Data for the file à Emp.txt

| Emp_Name | Emp_Salary | Emp_Id | Emp_Grade |

| ABC | 10 | 1 | |

| DEF | 30 | 2 | |

| GHI | 20 | 3 | |

| JKL | 60 | 4 | |

| MNO | 100 | 5 |

Transformation: If the employee’s salary is greater than 50 the grade is ‘A’ else it is ‘B’

Now that we have the requirement, let’s create the job

Now that we have the requirement, let’s create the job

Step 3:

Adding Stages to the job canvas

Since we are reading data from a text file which is comma separated, we will use the sequential file stage (details about how to use each stage are elaborated in next lessons)

Since we are reading data from a text file which is comma separated, we will use the sequential file stage (details about how to use each stage are elaborated in next lessons)

All the Stages for DataStage are listed in the Palatte. The Palatte contain stages (icons) that can be dragged on to the canvas of DataStage

To add, click on the stage that we need (in our case a sequential file stage) and then click on the Job Canvas

As per our requirement we need to perform a transformation on the data that is extracted, so let’s take the Transformer Stage and add it to the canvas

Our output as per the requirement is again a text file, so let’s drag another Sequential File Stage to the canvas

Now that we have the stages in places, we have to create LINKS between each of these stages so that data flows from once stage to another. Links can be added in two ways to a job:-

- By using the link stage in the General Tab of the Palatte

- Right click on the Source Stage (Sequential_File_0), hold the mouse button and drag the link that is generated to the Target Stage (Transformer_1). – Easier way

We need 2 links in our job:

- One from the source Sequential File Stage (Sequential_File_0) to the Transformer Stage (Transformer_1) – DSLink3

- The 2nd from the Transformer Stage to the Target Sequential File Stage (Sequential_File_2) – DSLink4

A good practice is to make sure that the stages are aligned in such a way that data always flows from Left to Right

Step 4:

Editing the Stages to perform Extraction, Transformation & Loading operations

For the stages to read-write/transform data, we have to edit them and let them know what to do with the data.

For the stages to read-write/transform data, we have to edit them and let them know what to do with the data.

To edit a stage, we have double click on the stage in the Job Design. Each stage will have a unique properties window.

As per our requirement, we have to first read data from the file Emp.txt. So let us edit the sequential file stage (Sequential_File_0) to make it read the data in the file.

Editing a Sequential File Stage to read data from a CSV file

Editing a Sequential File Stage to read data from a CSV file

- Double click on the stage and the following window opens

- The above window that pops up has all the properties that can be defined for a particular stageIt has two pages

- Stage

- Output

In the Stage Page, we will be able to define advanced properties like Combinability Mode, Preserve Partitioning, Node Map Constraint etc., àThese options are used while debugging or during performance tuning, so for now we will leave them as they are.The Properties in the Output Page are the ones that are important for reading the required data. We have 5 tabs in the Output page- General: We can specify the description of the stage. This is basically to maintain readability so that future developers will know what this stage is doing

- Properties: In this tab we have to specify the path of the file that we intend to read and select the appropriate options that apply to our requirement

- Format: In Format tab, we specify the record and field delimiters and other properties like null handling etc.

- Columns: Here we define the column names and the corresponding meta data for each column

- Advanced: Will have details of the buffering mode and is usually left as it is.

So, first let us enter the path of the source file under the Property “File“. Also notice that the first line of the input file has column names. So under the options section in Properties tab, we will have the Property “First Line is Column Names” set to True. We will leave the other properties as they are as we have nothing in the requirement that warrants a change in them.Now lets’ go to the “Format” tab. If you notice below, we have two sections – Record level and Field defaults

Record level – setting apply to each record in the input data

Field defaults – apply to each column/field in a recordFor out requirement, the record level delimiter if end (so we leave it as it is) and field delimiter is comma (which also we leave as it is). Note that in “Field defaults” we have another property called “Quote” which is set to double. This is used when our input data has double quotes for each field. Since we don’t have it in our input data we select the property and click on the delete button on our keyboard to remove it.One additional property that we need to set at the field level is “Null field value” (available under the Field defaults options). This is because in our input file the column Emp_Grade is NULL, this may result in the Sequential file stage dropping the record. To avoid such a scenario, we usually select the Null field value property and set it to empty (”) i.e., one open single quote and one close single quote without any space between the quotes.Now that the file and the delimiters are setup, let us enter go to the columns tab to enter the Meta-data for reading the input file. Below is the screen shot showing column names and the data types for each of them; entered as per our requirement.Now go back to the “Properties” tab and you will find that the “View Data” button on the right corner of the stage is enabled. Click on the button to see if the data is being fetched correctly from the file. If it is then the following should be displayedSince the data looks fine. Click “Close” on the popup and then click on OK on the stage properties to save the changes done.

Moving to the Transformer Stage:

Since the scope of this lesson is limited to creating a simple job, I will not dwell into the details of the Transformer stage. As per our requirement, we have to have the grade of the employee as “A” if his salary id greater than 50 and it is “B” if less than 50

Here is a screen shot of the transformer stage

The left half is the Input section and the right half is the output section. If you observer, the columns that we defined in the sequential file stage are visible in the left half. This is because of the link that we had between the stages. To have the data flow from input to output, we have to select all the columns, drag and drop them to the output link, i.e., DSlink4 box. Here is a screen shot

In the output part of the transformer stage we have derivation (explained in detail under the lesson “Transformer Stage”). Double click on the derivation of EMP_Grade and replace “DSLink3.Emp_Grade” with If DSLink3.Emp_Salary>50 then ‘A’ else ‘B’

If there are any errors in the derivation it will be shown in red otherwise it will be normal (blue).

Editing the Output Sequential File:

In the “Properties” tab enter the path and name of the output file and change the property “First Line is column names” to “True”

In the “Properties” tab enter the path and name of the output file and change the property “First Line is column names” to “True”

In the Format tab, remove the Quote property and click “Ok” to save the property changes to the stage.

We are now done with coding part. So now we have to save our job as “FirstJob” (explained in lesson “DataStage Job“). Once the job is saved, like in any other programming language we have to compile the job.

Click on the Compile button on the menu to compile the job. If successful the following message will be displayed

Step 5:

Running the Job – DataStage Director

Open DataStage Director and navigate to the folder in which the job is saved.

Open DataStage Director and navigate to the folder in which the job is saved.

Select the job that we just created and click on the run button ( ) and the following popup will be displayed

) and the following popup will be displayed

In the popup, click on the run button to start running the DataStage job. When we run the job Extraction, transformation and loading activities that we developed in the designer will be executed giving us the required output data.

Note that in case of any issue with the job the status in the Director will be “Aborted”. For successful runs it will be “Finished” or “Finished (see log)”.

Our job finished successfully, so here is the output file that was generated

Subscribe to:

Comments (Atom)